The Missing Layer in UX Research: Why Emotion Matters as Much as Usability

- Mohsen Rafiei

- Nov 18, 2025

For decades, digital product and service design focused on quantifiable performance metrics: clicks, errors, task completion rates, and time on task. These numbers were easy to capture and simple to benchmark, which made them ideal for early UX and HCI work. But as our understanding of human behavior grew, it became clear that these metrics reveal only the surface of the user experience. They tell us what people did, not why they did it or how they felt while doing it. We have repeatedly observed this gap in our lab (PUXLab) sessions, where behavioral success contradicts the emotional realities unfolding beneath it.

Emotion shapes perception, decision-making, attention, memory, and the sense of control users feel when navigating a system. A visually clean interface can feel confusing if the user is stressed. A slow-loading screen feels worse when the user is already anxious. Confidence can make a complex interaction feel simple, while frustration can make the simplest task feel impossible. Emotion is the hidden driver beneath behavior, the invisible metric that explains moments where experiences unexpectedly break down or succeed. By testing emotional responses alongside perception and cognition, we gain a more unified and accurate picture of how people actually engage with technology.

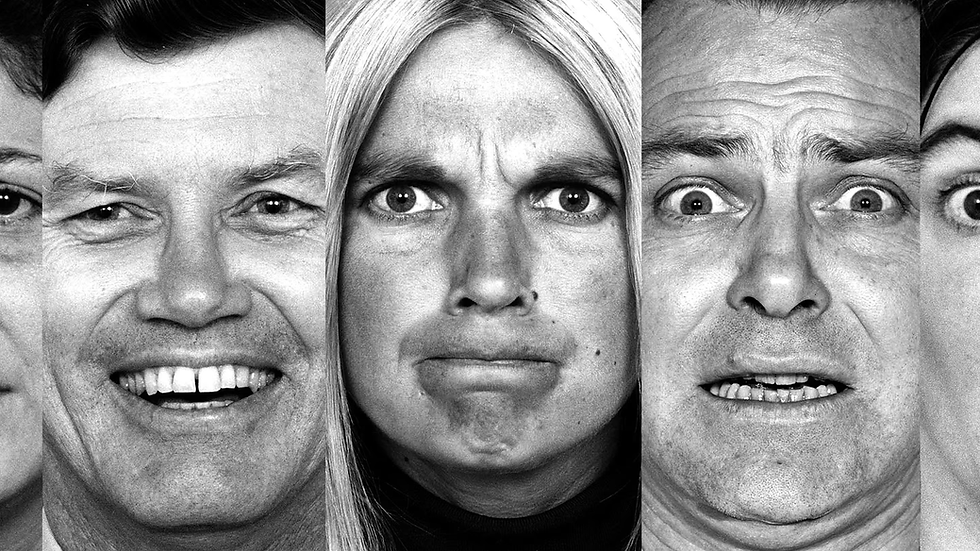

To understand these emotional undercurrents, we need methods that can capture what users feel, what they express, and what their bodies reveal even before they can articulate it themselves. No single measurement can accomplish this on its own. Emotion is a blend of subjective interpretation, subtle facial movements, physiological arousal, and the micro-behaviors embedded in everyday interaction. Modern UX and HCI therefore rely on multiple layers of evidence that complement and validate one another. Before exploring advanced sensing strategies, we begin with the most direct window into experience: the user’s own interpretation.

Subjective Scales: The User’s Own Interpretation of Experience

Subjective measures give us access to a user’s conscious interpretation of their emotional state. While self-reports are imperfect, they are essential. Physiological and behavioral signals need a subjective anchor to interpret meaning accurately. Traditional usability questionnaires capture satisfaction and ease of use, but often miss the emotional or experiential qualities of interaction, such as whether a design feels encouraging, overwhelming, trustworthy, or frustrating. More specialized affective instruments help reveal these nuances. Tools that separate pragmatic and emotional (hedonic) qualities, such as AttrakDiff, make it possible to identify situations where a design is functionally successful but emotionally flat. Rapid nonverbal scales, such as the Self-Assessment Manikin, allow users to quickly rate pleasure, arousal, and sense of control with minimal cognitive effort, making them ideal for capturing fleeting emotional impressions. Subjective scales are not about trusting users blindly. They are about understanding how users frame their experience in their own terms before deeper behavioral and physiological analysis is applied.

Facial Action Coding: Observing Real Emotional Expression

Facial expressions offer a rich behavioral window into emotional processing. The Facial Action Coding System, or FACS, is the most established method for describing facial behavior without making assumptions about emotional meaning. Instead of labeling emotions directly, FACS identifies individual muscle movements known as Action Units (AUs) that combine to form expressions. This anatomical approach matters. A smile produced only by pulling up the corners of the lips (AU12) communicates something very different from a smile that also raises the cheeks and engages the muscles around the eyes (AU6). The first is often polite and deliberate. The second is more often spontaneous and genuine. Even subtle movements, such as a quick brow knit or a slight tightening of the lips, can signal confusion or frustration well before users verbalize anything. Because FACS is grounded in observable anatomy rather than interpretation, it integrates well with subjective reports and physiological signals, adding nuance and clarity to user testing, especially in emotionally charged moments such as troubleshooting, onboarding, or learning a novel system.

Physiological Biometrics: The Body’s Involuntary Response to Emotion

Physiological signals offer something no interview or survey can: involuntary, moment-by-moment evidence of emotional response. These signals are regulated by the autonomic nervous system and are hard to control deliberately, which makes them less vulnerable to the self-presentation biases that affect self-report.

Electrodermal Activity, or EDA, measures changes in skin conductance related to sweat gland activity. It reflects emotional intensity (arousal), showing how strongly the user reacts, but it does not differentiate between positive and negative feelings. This makes EDA an excellent tool for identifying high-stress or high-excitement moments, especially when aligned with behavioral or gaze activity.
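
To make this concrete, here is a minimal Python sketch of how reaction moments might be counted in a skin conductance trace. It is an illustration under stated assumptions, not a description of any particular toolkit: the function name `count_scr_peaks`, the 0.05 microsiemens minimum rise, and the sample values are all hypothetical, and the input is assumed to be already smoothed.

```python
def count_scr_peaks(conductance_us, min_rise_us=0.05):
    """Count rises of at least min_rise_us from a local trough to the next local peak.

    conductance_us: skin conductance samples in microsiemens (assumed smoothed).
    min_rise_us: assumed minimum amplitude for a rise to count as a response.
    """
    if not conductance_us:
        return 0
    count = 0
    trough = peak = conductance_us[0]
    rising = False
    for prev, cur in zip(conductance_us, conductance_us[1:]):
        if cur > prev:
            if not rising:
                trough = prev  # the rise starts from the previous sample
                rising = True
            peak = cur
        elif cur < prev and rising:
            # The rise has ended; keep it only if it was large enough.
            if peak - trough >= min_rise_us:
                count += 1
            rising = False
    # A rise that is still in progress when the recording ends also counts.
    if rising and peak - trough >= min_rise_us:
        count += 1
    return count


# Hypothetical samples for illustration only, not measured data.
trace = [2.00, 2.01, 2.00, 2.00, 2.10, 2.25, 2.20, 2.10, 2.05, 2.04, 2.12, 2.11]
responses = count_scr_peaks(trace)
```

A count like this says how often and how strongly the user reacted, not whether the reaction was pleasant, which is why it needs to be read next to behavioral or self-report data.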

Heart Rate Variability, or HRV, measures the variation in time between successive heartbeats and is a widely used indicator of cognitive load and emotional strain. As tasks become mentally demanding or confusing, HRV typically decreases. Even mild interface delays, unclear instructions, or unexpected navigation paths can produce measurable HRV shifts before the user consciously identifies the source of difficulty. HRV can act as an early warning sign for cognitive overload and performance breakdown.
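
As a rough illustration, the sketch below computes RMSSD, one common time-domain HRV metric, for a baseline period and a task period. RMSSD is our choice for the example, since this article does not commit to a specific HRV measure, and the RR intervals are made-up values rather than recorded data.

```python
import math


def rmssd(rr_intervals_ms):
    """Root mean square of successive differences between RR intervals, in ms."""
    if len(rr_intervals_ms) < 2:
        raise ValueError("need at least two RR intervals")
    diffs = [b - a for a, b in zip(rr_intervals_ms, rr_intervals_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


# Hypothetical RR intervals (ms between heartbeats), for illustration only.
baseline_rr = [810, 790, 830, 780, 820, 800]
task_rr = [700, 705, 698, 702, 699, 701]

baseline = rmssd(baseline_rr)
during_task = rmssd(task_rr)

# A negative value means variability dropped relative to the user's own baseline.
relative_change = (during_task - baseline) / baseline
```

Comparing each participant against their own baseline, rather than against a fixed cutoff, is a deliberate choice here because resting HRV differs widely between people.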

Other physiological channels such as pupil dilation, breathing patterns, and facial electromyography reveal subtle aspects of emotional and cognitive processing that are difficult to detect through behavior alone. Pupil size increases with mental effort. Breathing tends to become shallow under stress. Micro-muscle activity can reveal emotional valence even before it appears in visible expression. These channels expose the implicit emotional landscape that self-reports overlook.

Behavioral Proxies: Emotional Signatures Embedded in Interaction

Complex sensors are not always necessary to infer emotional states. Everyday interaction patterns carry emotional signatures that can be measured at scale.

Cursor and mouse dynamics often mirror internal conflict or cognitive strain. Smooth, direct mouse movements suggest confidence. Erratic paths, zigzags, repeated corrections, and prolonged hovering indicate uncertainty or frustration. These subtle behaviors often reflect emotional responses users do not verbalize.
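
One simple way to quantify how direct a cursor movement is would be path efficiency: the straight-line distance between start and end divided by the distance actually travelled. The sketch below is an assumption-laden illustration. The function name `path_efficiency` and the coordinates are hypothetical, and a real study would pick its own metric and cutoffs.

```python
import math


def path_efficiency(points):
    """Straight-line distance from first to last sample divided by path length.

    points: sequence of (x, y) cursor positions in pixels.
    Returns a value near 1.0 for direct movement and lower values for detours.
    """
    if len(points) < 2:
        raise ValueError("need at least two cursor samples")
    travelled = sum(math.dist(a, b) for a, b in zip(points, points[1:]))
    if travelled == 0:
        return 1.0  # the cursor never moved, so there is no detour to measure
    return math.dist(points[0], points[-1]) / travelled


# Hypothetical cursor samples, for illustration only.
direct_path = [(0, 0), (50, 50), (100, 100)]
zigzag_path = [(0, 0), (40, 60), (20, 10), (70, 80), (100, 100)]

direct_score = path_efficiency(direct_path)
zigzag_score = path_efficiency(zigzag_path)
```

A low score alone does not prove frustration, since a user may simply be exploring, so a measure like this works best as a flag for moments worth reviewing alongside other evidence.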

Eye tracking reveals not only where users look but how hard they work to process what they see. Longer fixations often indicate confusion or effort. Rapid scanning can reflect difficulty locating information. Changes in pupil diameter track mental workload, provided lighting is controlled, since pupils also respond to brightness. Even small gaze shifts can uncover friction points that remain invisible in traditional usability testing.

Interaction logs provide another rich behavioral layer. Scrolling patterns, tapping sequences, repeated backtracking, hesitation pauses, and sudden abandonment all reflect emotional tone. Slow engagement may signal uncertainty. Immediate task abandonment often reveals frustration. Extended pauses can mark cognitive overload. These logs allow emotional insights even when physiological tools are not available.
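
A minimal sketch of how two of these signals, hesitation pauses and backtracking, might be pulled from a timestamped log follows. The `Event` structure, the 5-second pause threshold, and the screen names are hypothetical choices for illustration, and a real log schema will look different.

```python
from dataclasses import dataclass


@dataclass
class Event:
    timestamp_s: float  # seconds since session start
    screen: str         # screen the user landed on


def summarize_session(events, pause_threshold_s=5.0):
    """Count long gaps between events and returns to the screen seen two steps earlier."""
    long_pauses = 0
    backtracks = 0
    for i in range(1, len(events)):
        gap = events[i].timestamp_s - events[i - 1].timestamp_s
        if gap >= pause_threshold_s:
            long_pauses += 1
        # A -> B -> A pattern: the user left a screen and came straight back.
        if (
            i >= 2
            and events[i].screen == events[i - 2].screen
            and events[i].screen != events[i - 1].screen
        ):
            backtracks += 1
    return {"long_pauses": long_pauses, "backtracks": backtracks}


# Hypothetical session, for illustration only.
session = [
    Event(0.0, "home"),
    Event(2.0, "plans"),
    Event(9.5, "checkout"),
    Event(11.0, "plans"),
    Event(12.0, "checkout"),
    Event(20.0, "checkout"),
]
summary = summarize_session(session)
```

Counts like these are cheap to compute at scale, which is the main appeal of log-based proxies, but the threshold should be tuned per task because a five-second pause means something different on a form than on an article.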

Multimodal Fusion: Synthesizing a Complete Emotional Profile

Emotion is multidimensional, and no single method captures it fully. The most reliable emotional insight comes from combining multiple data streams such as subjective ratings, facial expression, physiological responses, behavioral patterns, and contextual variables. Multimodal fusion helps resolve ambiguity and extract meaning from signals that are inconclusive in isolation. An elevated heart rate could indicate stress or excitement. Combined with gaze avoidance and cursor instability, it points much more clearly to a user who is overwhelmed. A frown could signal irritation or concentration. With HRV drops and slow scrolling, the story becomes more precise.

Contextual information is essential. Task complexity, system latency, environmental conditions, user familiarity, and device constraints all influence emotional interpretation. When contextual variables are integrated into fusion models, emotional predictions become far more accurate and robust. Multimodal fusion turns fragmented inputs into a coherent emotional narrative.
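
The disambiguation logic described in this section can be sketched as a few hand-written rules. This is a toy illustration only: real fusion models are usually statistical or learned, and every field name, label, and rule below is a hypothetical assumption rather than a method used in any specific study. Contextual variables are left out to keep the example short.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SignalWindow:
    """Signals summarized over one time window (all fields are hypothetical)."""
    arousal_elevated: bool   # e.g. from heart rate or EDA
    gaze_avoidance: bool     # e.g. from eye tracking
    cursor_unstable: bool    # e.g. from mouse dynamics
    self_reported_valence: Optional[int] = None  # -1 negative, 0 neutral, 1 positive


def interpret(window: SignalWindow) -> str:
    """Combine signals so that arousal alone is never read as stress or excitement."""
    if not window.arousal_elevated:
        return "no strong arousal"
    strain_cues = int(window.gaze_avoidance) + int(window.cursor_unstable)
    if strain_cues == 2 or window.self_reported_valence == -1:
        return "likely overwhelmed or stressed"
    if strain_cues == 0 and window.self_reported_valence == 1:
        return "likely excited or engaged"
    return "ambiguous: collect more evidence"


overwhelmed = interpret(SignalWindow(True, True, True))
excited = interpret(SignalWindow(True, False, False, self_reported_valence=1))
unclear = interpret(SignalWindow(True, True, False))
```

The explicit "ambiguous" outcome is the point of the design: when the streams disagree or are incomplete, the sketch declines to guess instead of forcing a label.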

The Cost of Ignoring Emotional Data

When we rely solely on traditional usability metrics, we overlook the most influential force shaping user experience. Without emotional data, we lose the ability to detect early frustration or cognitive overload, identify emotional barriers that undercut confidence, explain why two users with similar performance metrics have entirely different experiences, design interfaces that respond intelligently to human needs, and recognize opportunities for genuine engagement and trust-building. Emotion is not a soft metric. It is a functional and measurable part of human-computer interaction. Without it, we design in the dark.

At the end of the day, testing emotion in UX and Human Factors is not about collecting more data. It is about collecting the right data for the specific experience we are trying to understand. Every project requires its own tailored methodological toolkit. A calm onboarding flow may only require subjective scales and interaction logs. A high-stakes healthcare interface may depend on HRV and EDA to capture stress. A cognitive training system may use EEG to monitor load. A social or gamified product may lean heavily on FACS and gaze dynamics to detect delight or subtle frustration. There is no universal emotional sensor. Emotion itself is multidimensional and context-dependent, which means our methods must be equally adaptable.

When we combine subjective insight, behavioral signatures, and physiological truth, we finally see the complete human picture. Emotion testing reveals the hidden forces shaping perception, learning, and decision-making. It uncovers friction users cannot articulate, hesitation logs cannot explain, and stress patterns interviews cannot detect. It allows us to diagnose extraneous cognitive load, fine-tune challenge and skill balance, and design interactions that work with rather than against the human nervous system. By treating emotion as a first-class metric and selecting tools intentionally for each research question, we move from building interfaces that users can operate to creating experiences they can trust, enjoy, and succeed in. Emotion is the connective tissue that unifies cognition, perception, and behavior. When we measure it rigorously, we design not only for usability but for human well-being.

In our own work at PUXLab, we use these methods in combination rather than in isolation. Each study demands its own blend of subjective reports, behavioral signatures, and physiological indicators, and we design our protocols around the specific emotional dynamics we aim to uncover. By grounding our research in real, practical testing scenarios, we are able to see not just how people interact with technology, but how they experience it moment by moment.
